The notion that IQ tests measure some common substrate of mental abilities that somehow adds up to General Intelligence, or the related "Spearman's g", has increasingly come under question:

"In the current study, verbal children with ASD performed moderately better on the RPM than on the Wechsler scales; children without ASD received higher percentile scores on the Wechsler than on the RPM. Adults with and without ASD received higher percentile scores on the Wechsler than the RPM. Results suggest that the RPM and Wechsler scales measure different aspects of cognitive abilities in verbal individuals with ASD..." https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4148695/

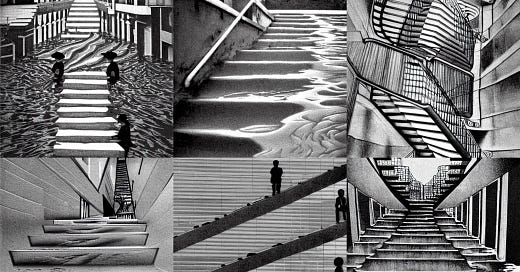

For the curious, here's a sample Raven's Progressive Matrices test.

Note that the linked site is apparently a coaching site, which indicates that pre-existing familiarity with the underlying principles can potentially confer a benefit. The RPM is quite different from tests like the Stanford-Binet or Wechsler, which principally rely on "verbal" criteria (such as analogies) and differ from subject knowledge tests (such as "educational achievement tests") in being very difficult to crib for; there's no "teaching the IQ test", at least if it's a Wechsler. "Cultural bias" on verbal IQ tests is a euphemism for "lack of scholastic skills", which often shows up in conjunction with "lack of practice with scholastic skills." There's no real way to weight that factor, or to precisely identify its influence on Wechsler test performance. But if someone has a low set of scholastic skills- i.e., they read, write and do basic math poorly, if at all- they're at a substantial disadvantage.

That's what Raven's Progressive Matrices "general intelligence test" was designed to remedy: the RPM is said to be "culture neutral", which is to say that the test is presented in the form of abstract shapes, with the Correct answer chosen on the basis of assenting to and following the rules ordained to provide a Uniform Correct result. The skill set being measured is what the researchers refer to as "fluid intelligence".

"Raven's Progressive Matrices (often referred to simply as Raven's Matrices) or RPM is a non-verbal test typically used to measure general human intelligence and abstract reasoning and is regarded as a non-verbal estimate of fluid intelligence.[1] It is one of the most common tests administered to both groups and individuals ranging from 5-year-olds to the elderly.[2] It comprises 60 multiple choice questions, listed in order of increasing difficulty.[2] This format is designed to measure the test taker's reasoning ability, the eductive ("meaning-making") component of Spearman's g (g is often referred to as general intelligence)..."

Now there's an interesting finding: "This format is designed to measure the test taker's reasoning ability, the eductive ("meaning-making") component of Spearman's g (g is often referred to as general intelligence)..."

But I thought that Spearman's "g" was a Monolithic quality, yes? So how is the ability measured said to be a "component", and granted to serve as a proxy measurement for "Spearman's g"?

Logically, the only way that probative value could be validly asserted for the "component" in question--the "eductive" capacity--is if the particular measured ability has a holographic character, isomorphic with the wider overall intelligence quotient ("IQ")--i.e., "general" intelligence. That is, if measuring this one particular component of mental ability--"intelligence"--is sufficient to serve for a conclusive evaluation about the whole.

Which it plainly isn't.

"... We therefore assessed a broad sample of 38 autistic children on the preeminent test of fluid intelligence, Raven's Progressive Matrices. Their scores were, on average, 30 percentile points, and in some cases more than 70 percentile points, higher than their scores on the Wechsler scales of intelligence..." https://pubmed.ncbi.nlm.nih.gov/17680932/

To review the commonly used nomenclature for the mental abilities measured by the RPM: "abstract reasoning" >> "abstract intelligence" >> "fluid intelligence" >> "reasoning ability" >> "eductive intelligence." Eductive Intelligence.1 The faculty held as probative for assessing General Human Intelligence--"IQ"--as ranked on a linear scale. I don't think that holds up under scrutiny. Abstract intelligence is an aspect of intelligence. Not to be discounted, but not the only one that counts.

The RPM has a particular way of testing the faculty that it refers to as "abstract reasoning" and "fluid intelligence." The test presents puzzles at levels of increasing difficulty. Essentially, the same question is asked over and over again, to an increasingly challenging degree. I have no problem assenting to the view that the RPM measures some ability or another- but I'm skeptical that what's measured translates to an accurate measure of "General Intelligence."

I used to take the concept of "general intelligence" for granted, but I've grown to be skeptical that Spearman's summed monolithic value "g" even exists. By contrast, I find Gardner's structural frame of "multiple intelligences" to be persuasive. https://www.verywellmind.com/gardners-theory-of-multiple-intelligences-2795161

Reframing the question of human intelligence as having multiple aspects--often at disparate levels within the same individual--makes more sense to me. One serious downside of the multifactorial hypothesis is that assessing and ranking each discrete ability with tests is a goal that's somewhere between "elusive" and "almost certainly impossible." (Whereas the RPM is a test examiner's dream. A cynic might even express the opinion that the RPM satisfies the requirements of the examiners better than it does the test subjects, and that this feature accounts for its enduring popularity in the intelligence test industry.) But it's difficult to deny the ordinary good sense of Gardner's taxonomy of intelligence abilities:

Visual-spatial

Linguistic-verbal

Logical-mathematical

Bodily-kinesthetic

Musical

Interpersonal

Intrapersonal

Naturalistic

(There's probably some validity to the objection that--considered in isolation--"bodily-kinesthetic" ability is more related to the cerebellum and nervous system than it is to the "higher centers." But all of the other abilities principally rely on cerebral functions.)

The counter-argument made by the defenders of Spearman's "g" is the "two-factor" hypothesis, which accounts for the 7-8 distinct abilities posited by Gardner by asserting that all of them rely on the same monolithic base of General Intelligence, and that the most "Generally Intelligent" are also the most likely to demonstrate excellence in multiple fields of intellectual endeavor.

My counter to that argument is that it's a claim offered without any support, other than the occasional anecdote- a position handily undermined by all of the anecdotal evidence that contradicts it. Spearman's g implies that those who measure highest on intelligence tests are able to demonstrate the broad-based excellence of their abilities across the entire array of skill sets. Authentic polymaths are actually quite rare. I'd venture that high-functioning verbal autistics are a much larger population.

1 "Eductive" is an interesting word to me, especially considering I didn't know there was any such word until less than an hour ago. I had heard the words "deductive" and "inductive", yes- but "eductive" is a new one on me. The word has some varied definitions.

According to the OED, the term appears only rarely in data-mining surveys of usage; it's considered obsolete, or anyway superfluous: "Eductive means relating to education or learning, or derived from education. It is an obsolete word that comes from Latin eductivus..." [ copied from search results; the OED doesn't let anyone past the first page without paying. ] https://www.oed.com/dictionary/eductive_adj

The Century Dictionary says "tending to educe or draw out." https://www.wordnik.com/words/eductive

Which leads us to the word "educe", for which the Cambridge Dictionary gives the meaning as '1. to obtain information: ex. "The government is not relying on any evidence educed from this process." 2. to develop something or make it appear: ex. "Experience empowers students by educing the power that they already possess."' https://dictionary.cambridge.org/us/dictionary/english/educe

(I thought students empowered lived experience, by assembling an inductive basis with occasional forays into logical deduction. But, hey, I’ll give the “eductive” idea a chance.)

The American Heritage Dictionary has: 1. To draw or bring out, syn. "evoke". 2. To infer or work out from given facts: "educe principles from experience." https://www.wordnik.com/words/educe

I find that definition of "educe" intriguing, because it sounds like the same means of processing input as that employed by AI algorithms: AI harvests data (massive amounts of it) from the Internet, and then draws inferences on the basis of pattern matching in order to sort the data more productively and appropriately, hopefully with an improved signal in its responses to the human who is prompting it with requests. It’s been programmed with an ability to draw abstract inferences, and then to get better at doing it.

But AI "learning" programs show no indication of harboring General Intelligence. No matter how competently they perform on "intelligence tests", and how much they're improving. I applaud the improvement. But I applaud the achievements of electronic calculators, too. Their facility with math- and with memory functions- far exceeds my own. But neither a calculator nor an AI learning algorithm is even awake, much less "intelligent." They're wonderfully egoless machines with no agenda of their own. But they also go astray at times, sometimes by picking up biases and prejudices in their media input. (I blame Society.) And their facility at learning and synthesizing extracted information is not to be confused with any basis to claim that they've achieved "General Intelligence", either on human terms or as machines.

So how is it that the results of the RPM--a test that relies on only a very narrow concept of intellectual skill- get to stand in as a proxy for human general intelligence- also known as IQ, Intelligence Quotient?

It’s also worth noting that AI programs are frequently known to run afoul of practical ground-level reality--an experience level utterly absent from their notice--a phenomenon known as hallucination. Ironically, abstractions drawn from human reasoning partake of a similar vulnerability to error and inaccuracy- one that arguably exceeds the problems found in AI. As with, for example, the dogmatic application of the abstract reasoning theories commonly referred to as “ideologies.” To name only two of the most well-known: how well do idealized abstractions like Marxism and Objectivism perform, when applied to real-world economic conditions? As blackboard theory, the abstract reasoning of an ideology describes an ideally functioning abstraction. An educed construction, working in accord with flawless mechanistic principles.

Not only is the real world a messier place, the human imagination is much wider than the linear progression intended by an RPM test question.

Ref. Raven's Progressive Matrices Text summarizing the theory and principles of test design

Excerpt (to view the numbered diagrams and read the numbered endnotes, refer to the full text version linked at the end of the excerpt)

“…It is the strength of people’s desire to make sense of such “booming, buzzing, confusion” (and their ability to do so) that Raven’s Progressive Matrices (RPM) tests set out to measure – and, as we shall see, to some extent, do measure.

Some people have difficulty seeing even the rectangle in Figure 1.4. Some see immediately the whole design in Figure 1.6 and its internal logic. Most people need to look at the design in Figure 1.6 and ask “What is it?”; “What might it be?”; “Does this part here tell me anything about what the whole might be?”; “Does this glimmering insight into what the whole might be tell me anything about the significance of this part?”

More specifically, the tests set out to measure meaning-making – or, more technically, ‘eductive’ – ability.

This involves the use of feelings to tell us what we might be looking at; which parts might be related to which other parts and how. Which parts beckon, attract, give us the feeling that we are on to something? To construct meaning effectively we also need to persist over time and check our initial hunches or insights…”

“And here is the dilemma – for if “cognitive activity” is a difficult and demanding activity having multiple components, no one will engage in it unless they are strongly intrinsically motivated to carry out the actions which require it…”

“While such observations underline the pervasive importance of eductive ability, they also bring us face to face with a fundamental conceptual and measurement problem. They raise the question of whether effective panel beaters, football players, and musicians all think– set about trying to generate meaning –“in the same way” in these very different areas. Certainly they rarely think in words.

So, at least at the present time, it would appear that, while they are clearly onto something important, it is misleading for people like Gardner1.5 to speak of different kinds of intelligence. It seems that the components of competence required to make meaning out of the booming, buzzing, confusion in very different areas are likely to be similar. But they will only be developed, deployed, and revealed when people are undertaking these difficult and demanding, cognitive, affective, and conative activities in the service of activities they are strongly motivated to carry out and thus not in relation to any single test – even the RPM!…”

“What, then, do the Raven Progressive Matrices tests measure? They measure what many researchers have called “general cognitive ability” – although this term is misleading because what the RPM really measure is a specific kind of “meaning making” ability. Spearman coined the term eductive ability to capture what he had in mind, deriving the word “eductive” from the Latin root educere which means “to draw out from rudimentary experience”. Thus, in this context it means “to construct meaning out of confusion”.

It is, however, important to note that Spearman elsewhere1.9 noted that the range of tests from which his g – and with it “eductive” ability –had emerged was so narrow that one would not be justified in generalising the concept in the way that many authors do…”

“We may now revert to our main theme: Our intention when embarking on the methodological discussion we have just completed was to begin to substantiate our claim that “eductive ability” is every bit as real and measurable as hardness or high-jumping ability. The advance of science is, above all, dependent on making the intangible explicit, visible, and measurable. One of Newton’s great contributions was that he, for the first time, elucidated the concept of force, showed that it was a common component in the wind, the waves, falling apples, and the movement of the planets, and made it “visible” by making it measurable. Our hope is that we have by now done the same thing for eductive, or meaning-making, ability. Our next move is to show that the conceptual framework and measurement process is much more robust and generalisable than many people might be inclined to think…”

“Raven was a student of Spearman’s. It is well known that Spearman1.19 was the first to notice the tendency of tests of what had been assumed to be separate abilities to correlate relatively highly and to suggest that the resulting pattern of intercorrelations could be largely explained by positing a single underlying factor that many people have since termed “general cognitive ability” but to which Spearman gave the name “g”. It is important to note that Spearman deliberately avoided using the word “intelligence” to describe this factor because the word is used by different people at different times to refer to a huge range of very different things1.20. (As we have seen, even the term “general cognitive ability” tends to have connotations about which Spearman had severe doubts.)

It is less well known that Spearman thought of g as being made up of two very different abilities which normally work closely together. One he termed eductive ability (meaning making ability) and the other reproductive ability (the ability to reproduce explicit information and learned skills). He did not claim that these were separate factors. Rather he argued that they were analytically distinguishable components of g. Spearman, like Deary and Stough1.21 later, saw this as a matter of unscrambling different cognitive processes, not as a factorial task. Whereas other later workers (e.g. Cattell1.22, Horn1.23, and Carroll1.24) sought to subsume these abilities into their factorial models, Spearman deliberately avoided doing so. Thus he wrote: “To understand the respective natures of eduction and reproduction – in their trenchant contrast, in their ubiquitous co-operation and in their genetic inter-linkage – to do this would appear to be for the psychology of individual abilities the very beginning of wisdom.”

In addition to developing the Progressive Matrices test, J. C. Raven therefore developed a vocabulary test – the Mill Hill Vocabulary Scale (MHV) – to assess the ability to master and recall certain types of information.

At root, the Mill Hill Vocabulary Scale consists of 88 words (of varying difficulty) that people are asked to define. The 88 words are arranged into two Sets. In most versions of the test, half the words are in synonym-selection format and half in open-ended format. Although widely used in the UK, this test has, for obvious reasons, been less widely used internationally. Yet this test, which can be administered in five minutes, correlates more highly with full-length “intelligence” tests than does the Progressive Matrices…”

above excerpted from https://www.researchgate.net/publication/255605513_The_Raven_Progressive_Matrices_Tests_Their_Theoretical_Basis_and_Measurement_Model
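For anyone who wants to see what "a single underlying factor" explaining a "pattern of intercorrelations" amounts to in plain arithmetic, here's a minimal Python sketch. Everything in it is my own assumption for illustration: the scores are synthetic (not real test data), the "loadings" are made-up numbers, and the function names are hypothetical. It simulates four test scores that either share one common factor or share nothing, and then asks how much of the correlation matrix the largest factor accounts for.

```python
import random


def simulate_scores(n_people=2000, loadings=(0.8, 0.7, 0.6, 0.5), seed=0):
    """Synthetic scores: each test = loading * common factor + independent noise.

    The loadings are hypothetical; a loading of 0.0 means the test shares
    nothing with the common factor.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n_people):
        g = rng.gauss(0, 1)  # the assumed common factor for this person
        rows.append([l * g + (1 - l * l) ** 0.5 * rng.gauss(0, 1) for l in loadings])
    return rows


def pearson(x, y):
    """Plain Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def correlation_matrix(rows):
    """Correlation between every pair of tests (columns of rows)."""
    cols = list(zip(*rows))
    k = len(cols)
    return [[pearson(cols[i], cols[j]) for j in range(k)] for i in range(k)]


def first_factor_share(matrix, iterations=300):
    """Share of total variance carried by the largest eigenvalue.

    Uses simple power iteration, then a Rayleigh quotient to estimate
    the dominant eigenvalue of the correlation matrix.
    """
    k = len(matrix)
    v = [1.0] * k
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    mv = [sum(matrix[i][j] * v[j] for j in range(k)) for i in range(k)]
    eigenvalue = sum(a * b for a, b in zip(v, mv))
    return eigenvalue / k


if __name__ == "__main__":
    shared = first_factor_share(correlation_matrix(simulate_scores()))
    unrelated = first_factor_share(
        correlation_matrix(simulate_scores(loadings=(0.0, 0.0, 0.0, 0.0)))
    )
    print(f"one shared factor:   {shared:.2f}")
    print(f"unrelated abilities: {unrelated:.2f}")
```

With these made-up loadings, the largest factor should carry more than half of the total variance when the tests share a common factor, and only about a quarter when the four abilities are unrelated. Note what the sketch does and doesn't show: it describes a pattern in scores. Whether a large first factor in real test data reflects one underlying ability is exactly the interpretive question at issue in this post.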

Effect of shortened version of Raven on self-rated “fatigue” and “motivation”- and also performance results https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10144826/

Sex differences and Raven’s: https://www.sciencedirect.com/science/article/abs/pii/S0191886903002411

Ref. Wechsler https://www.wikijob.co.uk/aptitude-tests/iq-tests/wechsler-iq-test